Large language model (LLM)-based AI assistants are powerful productivity tools, but without the right context and information, they can struggle to provide nuanced, relevant answers. While most LLM-based chat apps allow users to supply a few files for context, they often don’t have access to all the information buried across slides, notes, PDFs and images on a user’s PC.

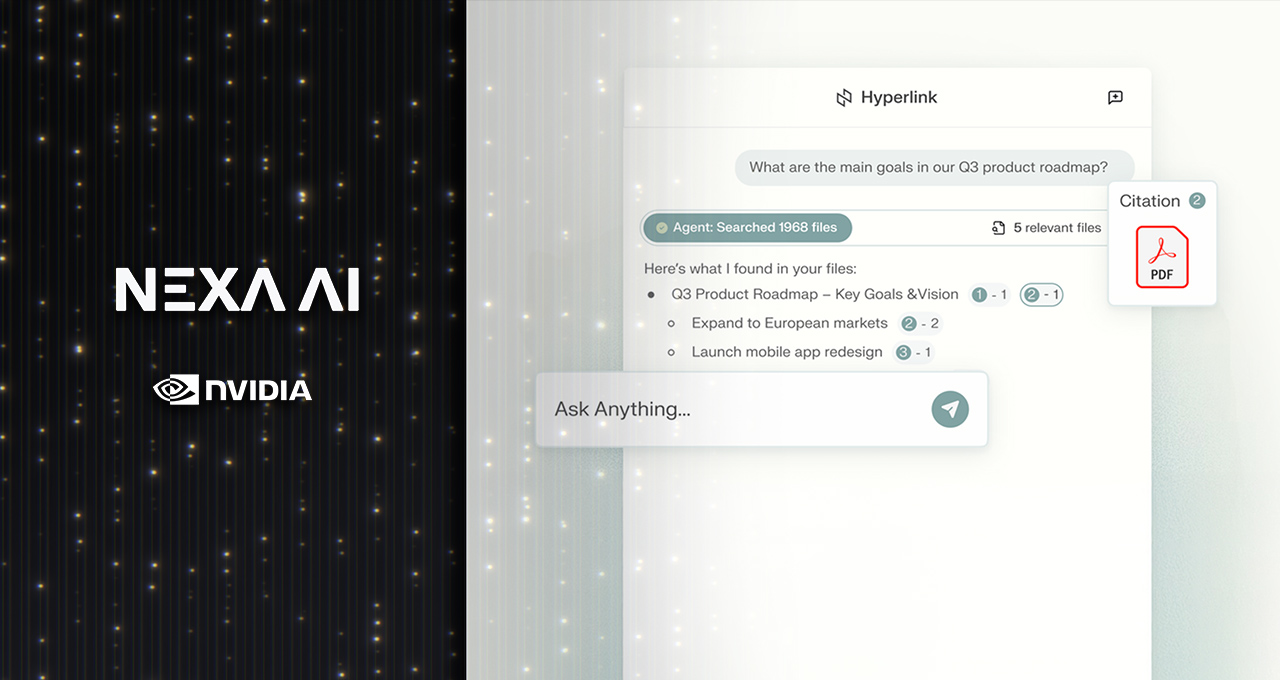

Nexa.ai’s Hyperlink is a local AI agent that addresses this challenge. It can quickly index thousands of files, understand the intent of a user’s question and provide contextual, tailored insights.

A new version of the app, available today, includes accelerations for NVIDIA RTX AI PCs, tripling retrieval-augmented generation indexing speed. For example, a dense 1GB folder that would previously take almost 15 minutes to index can now be ready for search in just four to five minutes. In addition, LLM inference is accelerated by 2x for faster responses to user queries.

Hyperlink uses generative AI to search thousands of files for the right information, understanding the intent and context of a user’s query, rather than merely matching keywords.

To do this, it creates a searchable index of all local files a user indicates — whether a small folder or every single file on a computer. Users can describe what they’re looking for in natural language and find relevant content across documents, slides, PDFs and images.
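The index-then-search flow described above can be illustrated with a minimal sketch. This is not Hyperlink's implementation: the `embed` function below is a toy word-count stand-in for a real neural embedding model, so it only captures word overlap rather than intent, and the file paths, function names and sample text are all hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model (assumption): lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(docs: dict[str, str]) -> dict[str, Counter]:
    """Index step: compute one vector per file, once, ahead of any query."""
    return {path: embed(text) for path, text in docs.items()}


def search(index: dict[str, Counter], query: str, top_k: int = 3) -> list[tuple[float, str]]:
    """Query step: rank indexed files by similarity to the query, dropping non-matches."""
    q = embed(query)
    scored = [(cosine(q, vec), path) for path, vec in index.items()]
    matches = [pair for pair in scored if pair[0] > 0]
    return sorted(matches, reverse=True)[:top_k]


# Hypothetical file contents, already extracted to plain text.
docs = {
    "notes/q3_budget.txt": "Quarterly budget review: marketing spend rose while travel costs fell.",
    "slides/roadmap.txt": "Product roadmap for next year, including the GPU indexing feature.",
    "pdfs/recipe.txt": "Slow-cooked tomato soup with basil and garlic.",
}
index = build_index(docs)
results = search(index, "what did we spend on marketing in the budget review")
for score, path in results:
    print(f"{score:.2f}  {path}")
```

The split between `build_index` and `search` mirrors why indexing speed matters: the expensive pass over every file happens once up front, and each later query only compares one vector against the stored ones.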

Combining search with the reasoning capabilities of RTX-accelerated LLMs, Hyperlink then answers questions based on insights from a user’s files. It connects ideas across sources, identifies relationships between documents and generates well-reasoned answers with clear citations.
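As a rough illustration of how retrieved passages can be combined with an LLM to produce cited answers, the sketch below assembles a prompt with numbered sources. It is an assumption about the general retrieval-augmented generation pattern, not Hyperlink's actual prompt or API; `build_prompt`, `answer` and `fake_llm` are hypothetical names, and `fake_llm` returns canned text in place of a locally running model.

```python
from typing import Callable


def build_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Number each retrieved passage so the model can cite it as [n]."""
    sources = "\n".join(
        f"[{i}] ({path}) {text}" for i, (path, text) in enumerate(passages, start=1)
    )
    return (
        "Answer the question using only the sources below. "
        "Cite sources by their bracketed number.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\nAnswer:"
    )


def answer(
    question: str,
    passages: list[tuple[str, str]],
    generate: Callable[[str], str],
) -> str:
    """Pass the assembled prompt to any text-generation callable."""
    return generate(build_prompt(question, passages))


def fake_llm(prompt: str) -> str:
    """Placeholder for a local model (assumption): ignores the prompt, returns canned text."""
    return "Marketing spend rose in Q3 [1]."


# Hypothetical passages, as a retrieval step might return them.
passages = [
    ("notes/q3_budget.txt", "Marketing spend rose while travel costs fell."),
    ("slides/roadmap.txt", "The roadmap includes the GPU indexing feature."),
]
question = "How did marketing spend change?"
prompt = build_prompt(question, passages)
reply = answer(question, passages, fake_llm)
print(prompt)
print(reply)
```

Because the sources are numbered in the prompt, a bracketed citation in the reply can be mapped back to a file path, which is one simple way to surface where an answer came from.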

All user data stays on the device and is kept private. This means personal files never leave the computer, so users don’t have to worry about sensitive information being sent to the cloud. They get the benefits of powerful AI without sacrificing control or peace of mind.

Hyperlink is already being adopted by professionals, students and creators.
