Large language model (LLM)-based AI assistants are powerful productivity tools, but without the right context and information, they can struggle to provide nuanced, relevant answers. While most LLM-based chat apps allow users to supply a few files for context, they often don’t have access to all the information buried across slides, notes, PDFs and images on a user’s PC.

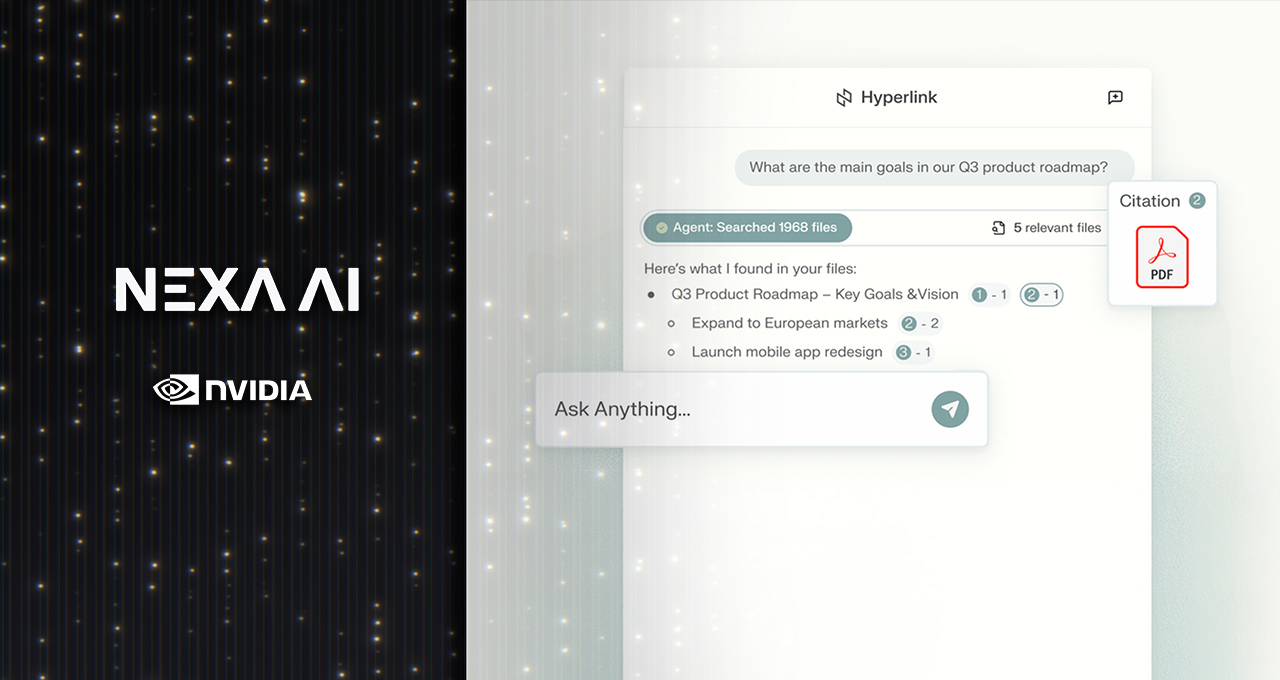

Nexa.ai’s Hyperlink is a local AI agent that addresses this challenge. It can quickly index thousands of files, understand the intent of a user’s question and provide contextual, tailored insights.

A new version of the app, available today, includes accelerations for NVIDIA RTX AI PCs, tripling retrieval-augmented generation indexing speed. For example, a dense 1GB folder that would previously take almost 15 minutes to index can now be ready for search in just four to five minutes. In addition, LLM inference is accelerated by 2x for faster responses to user queries.
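As a rough sanity check on those indexing figures, the short sketch below computes the speedup implied by the quoted times. The `speedup` helper is purely illustrative, and 15 minutes is used as an upper bound for "almost 15 minutes."

```python
def speedup(before_minutes: float, after_minutes: float) -> float:
    """Return how many times faster the new run is than the old one."""
    if before_minutes <= 0 or after_minutes <= 0:
        raise ValueError("durations must be positive")
    return before_minutes / after_minutes


# Figures quoted above: roughly 15 minutes before, four to five minutes after.
BEFORE_MINUTES = 15.0
low = speedup(BEFORE_MINUTES, 5.0)   # slower end of the new range
high = speedup(BEFORE_MINUTES, 4.0)  # faster end of the new range

print(f"Implied indexing speedup: {low:.2f}x to {high:.2f}x")
# prints "Implied indexing speedup: 3.00x to 3.75x"
```

The result is consistent with the "tripling" claim, with the faster end of the range coming out slightly better than 3x.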

Hyperlink uses generative AI to search thousands of files for the right information, understanding the intent and context of a user’s query, rather than merely matching keywords.

To do this, it creates a searchable index of all local files a user indicates — whether a small folder or every single file on a computer. Users can describe what they’re looking for in natural language and find relevant content across documents, slides, PDFs and images.

Combining search with the reasoning capabilities of RTX-accelerated LLMs, Hyperlink then answers questions based on insights from a user’s files. It connects ideas across sources, identifies relationships between documents and generates well-reasoned answers with clear citations.
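The general index, retrieve and answer-with-citations flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Hyperlink's actual implementation: the `Chunk` type, the function names and the sample files are all hypothetical, and the `embed` function is a toy word-count stand-in for the neural embedding model a real system would use to capture intent rather than surface words.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str  # file the text came from, used later for citations
    text: str


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(chunks: list[Chunk]) -> list[tuple[Chunk, Counter]]:
    """Indexing step: embed every chunk once, up front."""
    return [(chunk, embed(chunk.text)) for chunk in chunks]


def retrieve(index: list[tuple[Chunk, Counter]], query: str, k: int = 2) -> list[Chunk]:
    """Retrieval step: return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(query: str, hits: list[Chunk]) -> str:
    """Generation step input: numbered sources the LLM is asked to cite."""
    context = "\n".join(
        f"[{i}] ({chunk.source}) {chunk.text}" for i, chunk in enumerate(hits, 1)
    )
    return (
        "Answer using only the numbered sources and cite them.\n"
        f"{context}\n"
        f"Question: {query}"
    )


# Hypothetical sample files, invented for this example.
chunks = [
    Chunk("budget.pdf", "The Q3 marketing budget was increased to cover the product launch."),
    Chunk("notes.txt", "Team offsite is planned for October in Denver."),
    Chunk("slides.pptx", "Launch timeline: beta in August, general availability in September."),
]
index = build_index(chunks)
query = "What is the marketing budget for the launch?"
hits = retrieve(index, query, k=2)
prompt = build_prompt(query, hits)
print(prompt)
```

In a real pipeline the resulting prompt would be passed to a locally running LLM, and the numbered source labels are what make it possible for the answer to point back to specific files.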

All user data stays on the device and is kept private. This means personal files never leave the computer, so users don’t have to worry about sensitive information being sent to the cloud. They get the benefits of powerful AI without sacrificing control or peace of mind.

Hyperlink is already being adopted by professionals, students and creators.
