Inside the AI accelerator arms race: AMD, Nvidia, and hyperscalers commit to annual releases through the decade

"We’re delighted by Google’s success - they’ve made great advances in AI and we continue to supply to Google. NVIDIA is a generation ahead of the industry - it’s the only platform that runs every AI model and does it everywhere computing is done. NVIDIA offers greater…" (November 25, 2025)

Google’s TPUs are application-specific chips, tuned for high-throughput matrix operations central to large language model training and inference. The current-generation TPU v5p features 95 gigabytes of HBM3 memory and a bfloat16 peak throughput of more than 450 TFLOPS per chip. TPU v5p pods can contain nearly 9,000 chips and are designed to scale efficiently inside Google Cloud’s infrastructure.
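
For a sense of scale, the per-chip figures above can be multiplied out to pod level. The sketch below is back-of-the-envelope arithmetic only: it uses 450 TFLOPS and 9,000 chips as round stand-ins for "more than 450" and "nearly 9,000", and the helper name `pod_totals` is made up for illustration. Peak figures like these ignore interconnect overhead and real-world utilization.

```python
# Back-of-the-envelope pod totals from the per-chip figures quoted above.
# Assumptions: 450 TFLOPS and 9,000 chips are rounded stand-ins for
# "more than 450 TFLOPS" and "nearly 9,000 chips"; real pods will differ.

def pod_totals(chips: int, tflops_per_chip: float, hbm_gb_per_chip: float):
    """Return (peak exaFLOPS, total HBM in terabytes) for a pod."""
    peak_eflops = chips * tflops_per_chip / 1_000_000  # 1 EFLOPS = 1e6 TFLOPS
    hbm_tb = chips * hbm_gb_per_chip / 1_000           # 1 TB = 1e3 GB (decimal)
    return peak_eflops, hbm_tb


if __name__ == "__main__":
    eflops, hbm_tb = pod_totals(chips=9_000, tflops_per_chip=450, hbm_gb_per_chip=95)
    print(f"peak bf16 throughput: ~{eflops:.2f} EFLOPS")
    print(f"aggregate HBM:        ~{hbm_tb:.0f} TB")
```

On those rounded inputs, a full pod works out to roughly 4 EFLOPS of peak bfloat16 compute and about 855 TB of HBM.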

Crucially, Google owns the TPU architecture, instruction set, and software stack. Broadcom acts as Google’s silicon implementation partner, converting Google’s architecture into a manufacturable ASIC layout. Broadcom also supplies high-speed SerDes, power management, and packaging, and handles post-fabrication testing. Fabrication itself is performed by TSMC.

By contrast, Nvidia’s Hopper-based H100 GPU includes 80 billion transistors, 80 gigabytes of HBM3 memory, and delivers up to 4 PFLOPS of AI performance using FP8 precision. Its successor, the Blackwell-based GB200, increases HBM capacity to 192 gigabytes and peak throughput to around 20 PFLOPS. It’s also designed to work seamlessly in tandem with Grace CPUs in hybrid configurations, expanding Nvidia’s presence in both the cloud and emerging local compute nodes.

TPUs are programmed via Google’s XLA compiler stack, which serves as the backend for frameworks like JAX and TensorFlow. While the XLA-based approach offers performance portability across CPU, GPU, and TPU targets, it typically requires model developers to adopt specific libraries and compilation patterns tailored to Google’s runtime environment.
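
To make the XLA workflow concrete, here is a minimal JAX sketch, assuming the `jax` package is installed. The function name `dense_layer` and the array shapes are illustrative, not taken from any Google codebase. The same source is traced by `jax.jit` and compiled by XLA for whichever backend is available on the machine.

```python
# Minimal sketch: one JAX function, compiled by XLA for whatever backend is present.
# `dense_layer` and the shapes below are illustrative assumptions.
import jax
import jax.numpy as jnp


@jax.jit  # traces the function per input shape/dtype and hands the graph to XLA
def dense_layer(x, w, b):
    # Matrix multiply plus bias and activation: the kind of op TPUs are tuned for.
    return jax.nn.relu(x @ w + b)


if __name__ == "__main__":
    key_x, key_w = jax.random.split(jax.random.PRNGKey(0))
    x = jax.random.normal(key_x, (8, 128), dtype=jnp.bfloat16)
    w = jax.random.normal(key_w, (128, 64), dtype=jnp.bfloat16)
    b = jnp.zeros((64,), dtype=jnp.bfloat16)

    y = dense_layer(x, w, b)
    print("backend:", jax.default_backend())  # e.g. "cpu", "gpu", or "tpu"
    print("output:", y.shape, y.dtype)
```

The portability comes from the tracing step: the function body must be expressible as a static graph of array operations, which is the constraint behind the "compilation patterns" mentioned above.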

By contrast, Nvidia’s stack is broader and more deeply embedded in industry workflows. CUDA, cuDNN, TensorRT, and related developer tools form the default substrate for large-scale AI development and deployment. This tooling spans model optimization, distributed training, mixed-precision scheduling, and low-latency inference, all backed by a mature ecosystem of frameworks, pretrained models, and commercial support.

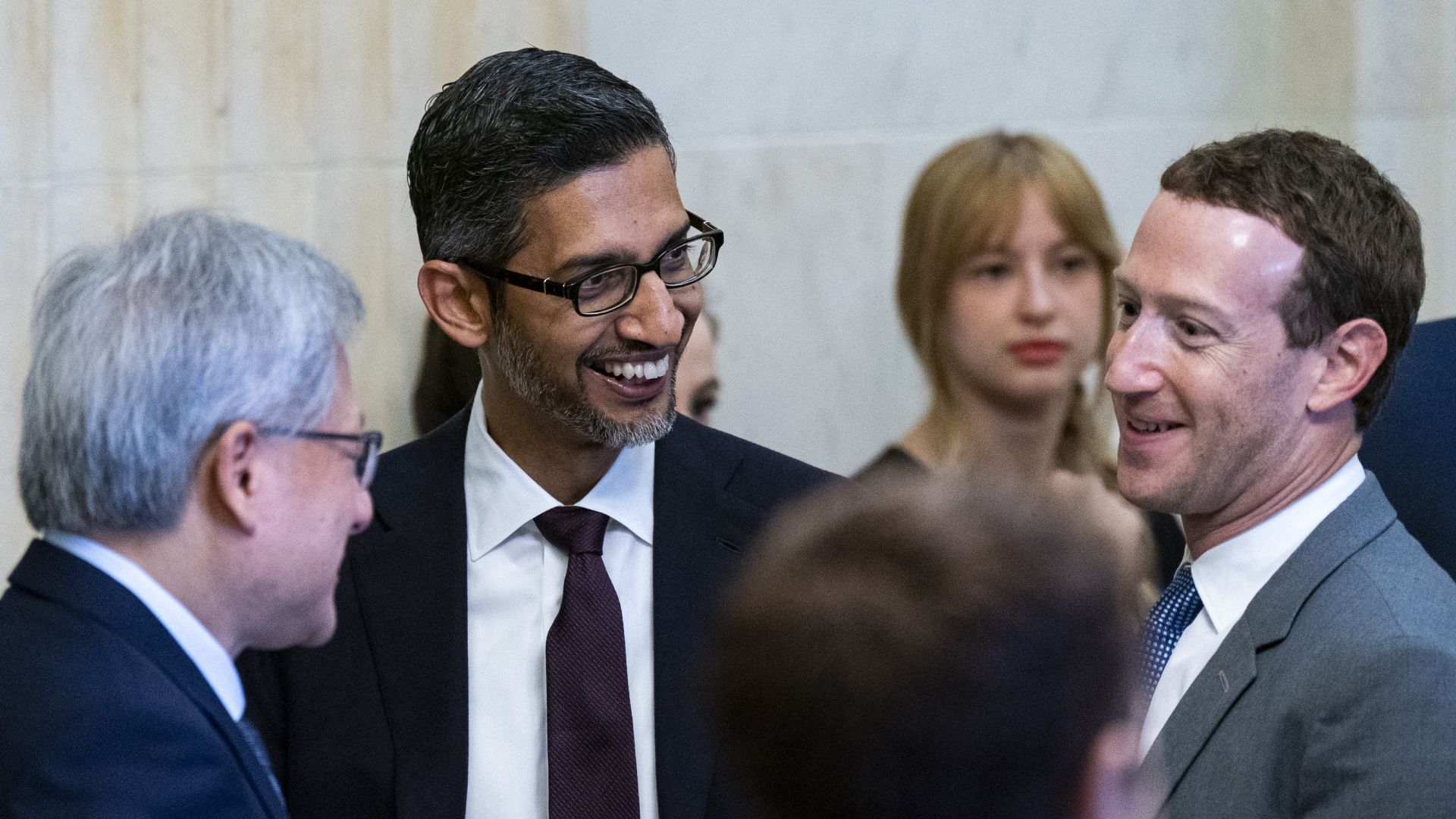

As a result, moving from CUDA to XLA is no trivial task. Developers must rewrite or re-tune code, manage different performance bottlenecks, and in some cases adopt entirely new frameworks. Meta has internal JAX development and is better positioned than most to experiment, but friction remains a gating factor for wider TPU adoption.
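
One small, hedged illustration of the kind of rewrite involved: eager-style Python that branches on an array value has to be restated as array operations before `jax.jit` can trace it for XLA. The function names `scale_eager` and `scale_jit` are hypothetical, and norm-based rescaling is just a convenient example, not something the article attributes to Meta or Google.

```python
# Sketch of a typical porting change: data-dependent branching under jax.jit.
# Function names are hypothetical; assumes the `jax` package is installed.
import jax
import jax.numpy as jnp


def scale_eager(g, max_norm):
    # Works when run eagerly. Under jax.jit the `if` fails, because `norm`
    # is a traced value with no concrete truth value at trace time.
    norm = jnp.linalg.norm(g)
    if norm > max_norm:
        return g * (max_norm / norm)
    return g


@jax.jit
def scale_jit(g, max_norm):
    # Same logic written as array ops, so XLA can compile a single graph.
    norm = jnp.linalg.norm(g)
    factor = jnp.where(norm > max_norm, max_norm / norm, 1.0)
    return g * factor


if __name__ == "__main__":
    g = jnp.array([3.0, 4.0])       # norm is 5
    print(scale_eager(g, 1.0))      # rescaled to unit norm
    print(scale_jit(g, 1.0))        # same values, compiled path
    print(scale_jit(g, 10.0))       # under the limit, returned unchanged
```

Both versions compute the same result; the difference is that only the second can be compiled as a whole, and changes of this sort add up across a large codebase.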

According to Reuters, some Google Cloud executives believe the Meta deal could generate revenue equal to as much as 10% of Nvidia’s current annual data center business. That is, of course, a speculative figure, but Google has already committed to delivering as many as one million TPUs to Anthropic and is pushing its XLA and JAX stack hard among AI startups looking for alternatives to CUDA.
