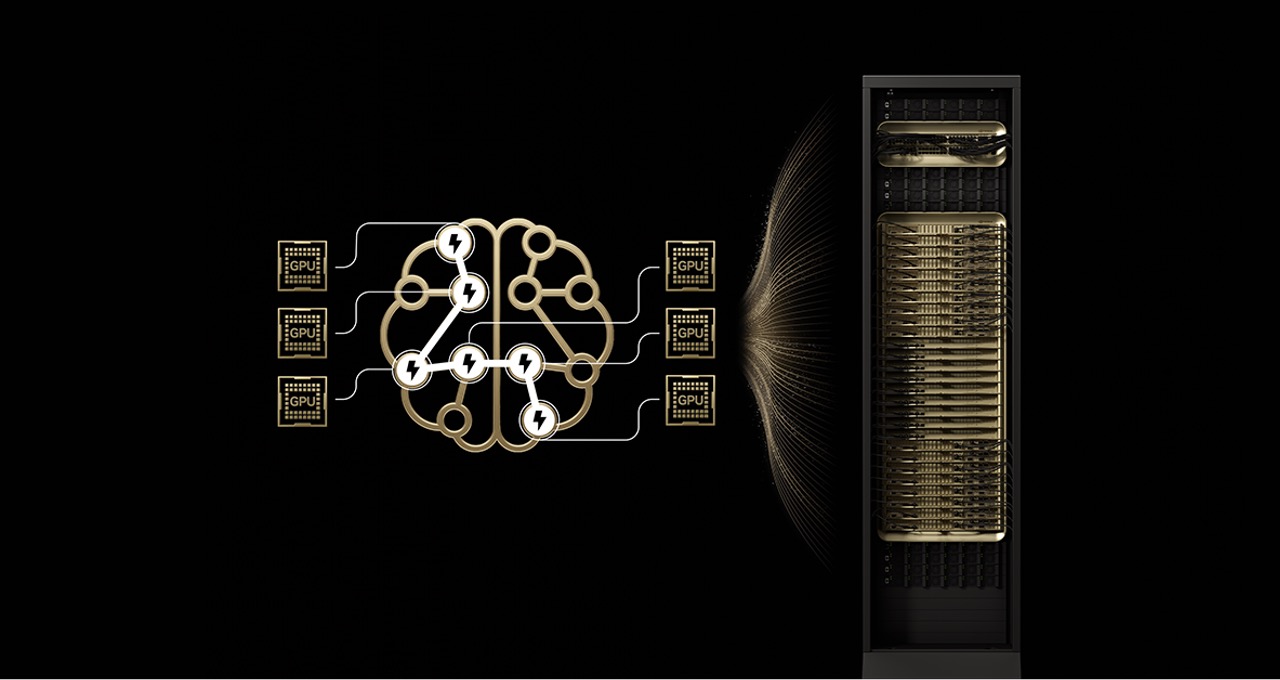

Reducing the number of experts per GPU: Distributing experts across up to 72 GPUs reduces the number of experts per GPU, minimizing parameter-loading pressure on each GPU’s high-bandwidth memory. Fewer experts per GPU also frees up memory space, allowing each GPU to serve more concurrent users and support longer input lengths.

Accelerating expert communication: Experts spread across GPUs can communicate with each other instantly using NVLink. The NVLink Switch also has the compute power needed to perform some of the calculations required to combine information from various experts, speeding up delivery of the final answer.
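To make the first point concrete, here is a minimal sketch of how the per-GPU expert count shrinks as the expert-parallel group grows. The figure of 256 routed experts per layer and the helper name `experts_per_gpu` are assumptions chosen for illustration, not values taken from this post.

```python
import math


def experts_per_gpu(num_experts: int, num_gpus: int) -> int:
    """Upper bound on experts hosted by any one GPU when experts are spread evenly."""
    if num_experts <= 0 or num_gpus <= 0:
        raise ValueError("num_experts and num_gpus must be positive")
    return math.ceil(num_experts / num_gpus)


# Assumed for illustration: a model with 256 routed experts in each MoE layer.
NUM_EXPERTS = 256

for gpus in (8, 72):
    count = experts_per_gpu(NUM_EXPERTS, gpus)
    print(f"{gpus} GPUs -> at most {count} experts per GPU per layer")
```

Under these assumptions, moving from an 8-GPU group to a 72-GPU group cuts the number of expert weight sets each GPU must hold and stream from memory by roughly a factor of eight.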

Other full-stack optimizations also play a key role in unlocking high inference performance and lower cost per token for MoE models. The NVIDIA Dynamo framework orchestrates disaggregated serving by assigning prefill and decode tasks to different GPUs, allowing decode to run with large expert parallelism, while prefill uses parallelism techniques better suited to its workload. The NVFP4 format helps maintain accuracy while further boosting performance and efficiency.
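The idea behind disaggregated serving can be sketched with a toy scheduler that places the prefill and decode phases of each request on separate GPU pools. This is not the NVIDIA Dynamo API; the `GpuPool` class, the `serve` function, the pool sizes and the round-robin policy are all hypothetical and exist only to illustrate the split.

```python
from dataclasses import dataclass, field


@dataclass
class GpuPool:
    """A hypothetical group of GPUs dedicated to one phase of inference."""
    name: str
    gpu_ids: list
    assignments: dict = field(default_factory=dict)  # request_id -> gpu_id
    _next: int = 0

    def assign(self, request_id: str) -> int:
        # Round-robin placement; a real scheduler would weigh load and cache state.
        gpu = self.gpu_ids[self._next % len(self.gpu_ids)]
        self._next += 1
        self.assignments[request_id] = gpu
        return gpu


def serve(request_id: str, prefill_pool: GpuPool, decode_pool: GpuPool) -> dict:
    """Plan one request: prompt processing on one pool, token generation on another."""
    prefill_gpu = prefill_pool.assign(request_id)
    decode_gpu = decode_pool.assign(request_id)
    return {"request": request_id, "prefill_gpu": prefill_gpu, "decode_gpu": decode_gpu}


# Assumed pool sizes: a small prefill pool and a larger decode pool.
prefill_pool = GpuPool("prefill", gpu_ids=[0, 1, 2, 3])
decode_pool = GpuPool("decode", gpu_ids=list(range(4, 12)))

plans = [serve(f"req-{i}", prefill_pool, decode_pool) for i in range(3)]
for plan in plans:
    print(plan)
```

Because the two pools are independent, each can be sized and parallelized for its own phase, which is the property the paragraph above attributes to disaggregated serving.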

Open-source inference frameworks such as NVIDIA TensorRT-LLM, SGLang and vLLM support these optimizations for MoE models. NVIDIA TensorRT-LLM and SGLang have played a significant role in advancing large-scale MoE deployment on GB200 NVL72, helping validate and mature many of the techniques used today.

To bring this performance to enterprises worldwide, the GB200 NVL72 is being deployed by major cloud service providers and NVIDIA Cloud Partners including Amazon Web Services, Core42, CoreWeave, Crusoe, Google Cloud, Lambda, Microsoft Azure, Nebius, Nscale, Oracle Cloud Infrastructure, Together AI and others.

“At CoreWeave, our customers are leveraging our platform to put mixture-of-experts models into production as they build agentic workflows,” said Peter Salanki, cofounder and chief technology officer at CoreWeave. “By working closely with NVIDIA, we are able to deliver a tightly integrated platform that brings MoE performance, scalability and reliability together in one place. You can only do that on a cloud purpose-built for AI.”

Customers such as DeepL are using Blackwell NVL72 rack-scale design to build and deploy their next-generation AI models.

“DeepL is leveraging NVIDIA GB200 hardware to train mixture-of-experts models, advancing its model architecture to improve efficiency during training and inference, setting new benchmarks for performance in AI,” said Paul Busch, research team lead at DeepL.

NVIDIA GB200 NVL72 efficiently scales complex MoE models and delivers a 10x leap in performance per watt.

This performance leap isn’t just a benchmark; it lowers the cost per token by over 10x, transforming the economics of AI at scale in power- and cost-constrained data centers.

Simply put, because GB200 NVL72 processes ten times as many tokens using the same time and power, the cost required to generate each individual token drops. GB200 NVL72’s order-of-magnitude efficiency gains far outweigh its incremental increase in costs, resulting in significantly better token economics.
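The arithmetic behind this argument can be written out in a few lines. The hourly cost and throughput values below are placeholders chosen for round numbers, not measured or published figures, and the function names are hypothetical.

```python
def cost_per_million_tokens(cost_per_hour: float, tokens_per_second: float) -> float:
    """Cost to generate one million tokens at a steady throughput."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return cost_per_hour / tokens_per_hour * 1_000_000


def cost_reduction(throughput_gain: float, cost_increase: float) -> float:
    """Factor by which cost per token falls when throughput and system cost both change."""
    return throughput_gain / cost_increase


# Placeholder inputs: the same hourly cost, ten times the token throughput.
baseline = cost_per_million_tokens(cost_per_hour=10.0, tokens_per_second=1_000)
faster = cost_per_million_tokens(cost_per_hour=10.0, tokens_per_second=10_000)
print(f"baseline: {baseline:.3f} per million tokens")
print(f"faster:   {faster:.3f} per million tokens")
print(f"reduction: {baseline / faster:.1f}x")

# A higher system cost offsets part of the throughput gain.
for increase in (1.0, 1.25, 2.0):
    print(f"cost x{increase}: {cost_reduction(10.0, increase):.1f}x cheaper per token")
```

The relationship is a simple ratio: the reduction in cost per token equals the throughput gain divided by the increase in system cost, so the net benefit depends on how the two compare.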

As shown in the SemiAnalysis InferenceMax results for DeepSeek-R1, this efficiency delivers more than a 10x reduction in cost per million tokens compared to NVIDIA H200 systems. This dramatic shift allows enterprises to deploy complex “thinking” MoE models into everyday products.

This 10x performance leap extends to other frontier models as well, significantly reducing cost per token.

“With GB200 NVL72 and Together AI’s custom optimizations, we are exceeding customer expectations for large-scale inference workloads for MoE models like DeepSeek-V3,” said Vipul Ved Prakash, cofounder and CEO of Together AI. “The performance gains come from NVIDIA’s full-stack optimizations coupled with Together AI Inference breakthroughs across kernels, runtime engine and speculative decoding.”

Kimi K2 Thinking, the most intelligent open-source model, serves as another proof point, achieving 10x better generational performance when deployed on GB200 NVL72.

Fireworks AI currently deploys Kimi K2 on the NVIDIA B200 platform, achieving the highest performance on the Artificial Analysis leaderboard.

“NVIDIA GB200 NVL72 rack-scale design makes MoE model serving dramatically more efficient,” said Lin Qiao, cofounder and CEO of Fireworks AI. “Looking ahead, NVL72 has the potential to transform how we serve massive MoE models, delivering major performance improvements over the Hopper platform and setting a new bar for frontier model speed and efficiency.”

Mistral Large 3 also achieved a 10x performance gain on the GB200 NVL72 compared with the prior-generation H200. This generational gain translates into better user experience, lower per-token cost and higher energy efficiency for this new MoE model.

The NVIDIA GB200 NVL72 rack-scale system is designed to deliver strong performance and lower token costs beyond MoE models.

The reason becomes clear when looking at where AI is heading: the newest generation of multimodal AI models has specialized components for language, vision, audio and other modalities, activating only the ones relevant to the task at hand.

In agentic systems, different “agents” specialize in planning, perception, reasoning, tool use or search, and an orchestrator coordinates them to deliver a single outcome. In both cases, the core pattern mirrors MoE: route each part of the problem to the most relevant experts, then coordinate their outputs to produce the final outcome.
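The route-then-combine pattern described here can be sketched as a small top-k router. This is an illustrative toy rather than any production implementation: the expert functions, router scores and the choice of k=2 are all assumptions, and real MoE layers operate on tensors with learned routers.

```python
import math


def softmax(scores):
    """Convert raw router scores into probabilities (shifted by the max for stability)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    if not 1 <= k <= len(scores):
        raise ValueError("k must be between 1 and the number of experts")
    probs = softmax(scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the selected experts only
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, scores, k=2):
    """Run only the selected experts and combine their outputs by routing weight."""
    return sum(weight * experts[i](x) for i, weight in route_top_k(scores, k))


# Hypothetical experts and router scores for a single input.
experts = [lambda x: x + 1.0, lambda x: x * 2.0, lambda x: x ** 2, lambda x: -x]
scores = [0.1, 2.0, 1.5, -1.0]

selected = route_top_k(scores, k=2)
output = moe_forward(3.0, experts, scores, k=2)
print("selected experts:", [i for i, _ in selected])
print("combined output:", round(output, 4))
```

Only two of the four experts run for this input, which is the source of the efficiency: compute scales with the number of experts activated, not the number available.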

Extending this principle to production environments where multiple applications and agents serve multiple users unlocks new levels of efficiency. Instead of duplicating massive AI models for every agent or application, this approach can enable a shared pool of experts accessible to all, with each request routed to the right expert.

Mixture of experts is a powerful architecture moving the industry toward a future where massive capability, efficiency and scale coexist. The GB200 NVL72 unlocks this potential today, and NVIDIA’s roadmap with the NVIDIA Vera Rubin architecture will continue to expand the horizons of frontier models.

Learn more about how GB200 NVL72 scales complex MoE models in this technical deep dive.

This post is part of Think SMART, a series focused on how leading AI service providers, developers and enterprises can boost their inference performance and return on investment with the latest advancements from NVIDIA’s full-stack inference platform.



