The world’s smallest AI supercomputer: NVIDIA DGX Spark represents the cutting edge of AI computing hardware for enterprise and research applications.

Humanoids and robot dogs, up close: Unitree robots are on display, captivating attendees with advanced mobility powered by the latest robotics technology.

Why open source is important: Learn how it can empower developers to build stronger communities, iterate on features, and seamlessly integrate the best of open source AI.

A study from the Center for Security and Emerging Technology (CSET) published today shows how access to open model weights unlocks more opportunities for experimentation, customization and collaboration across the global research community.

The report outlines seven high-impact research use cases where open models are making a difference — including fine-tuning, continued pretraining, model compression and interpretability.

With access to weights, developers can adapt models for new domains, explore new architectures and extend functionality to meet their specific needs. This also supports trust and reproducibility. When teams can run experiments on their own hardware, share updates and revisit earlier versions, they gain control and confidence in their results.

Additionally, the study found that nearly all open model users share their data, weights and code, building a fast-growing culture of collaboration. This open exchange of tools and knowledge strengthens partnerships between academia, startups and enterprises, facilitating innovation.

NVIDIA is committed to empowering the research community through the NVIDIA Nemotron family of open models — featuring not just open weights, but also pretraining and post-training datasets, detailed training recipes, and research papers that share the latest breakthroughs.

Read the full CSET study to learn how open models are helping the AI community move forward.

At the PyTorch Conference, Jim Fan, director of robotics and distinguished research scientist at NVIDIA, discussed the Physical Turing Test — a way of measuring the performance of intelligent machines in the physical world.

With conversational AI now capable of fluent, lifelike communication, Fan noted that the next challenge is enabling machines to act with similar naturalism. The Physical Turing Test asks: can an intelligent machine perform a real-world task so fluidly that a human cannot tell whether a person or a robot completed it?

Fan highlighted that progress in embodied AI and physical AI depends on generating large amounts of diverse data and on access to open robot foundation models and simulation frameworks. He also walked through a unified workflow for developing embodied AI.

With synthetic data workflows like NVIDIA Isaac GR00T-Dreams — built on NVIDIA Cosmos world foundation models — developers can generate virtual worlds from images and prompts, speeding the creation of large sets of diverse and physically accurate data.

That data can then be used to post-train NVIDIA Isaac GR00T N open foundation models for generalized humanoid robot reasoning and skills. But before the models are deployed in the real world, these new robot skills need to be tested in simulation.

Open simulation and learning frameworks such as NVIDIA Isaac Sim and Isaac Lab allow robots to “practice” countless times across millions of virtual environments before operating in the real world, dramatically accelerating learning and deployment cycles.

Plus, with Newton, an open-source, differentiable physics engine built on NVIDIA Warp and OpenUSD, developers can bring high-fidelity simulation to complex robotic dynamics such as motion, balance and contact, helping to reduce the simulation-to-real gap.
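"Differentiable" means the simulator can report how an outcome changes with respect to its inputs, which lets gradient-based methods tune parameters directly. The pure-Python sketch below illustrates that idea only: it is not Newton's or Warp's API. It uses forward-mode automatic differentiation with a hypothetical `Dual` number type to get the derivative of a thrown ball's final height with respect to its launch velocity, integrated with semi-implicit Euler. The function names and physical values are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Dual:
    """Forward-mode autodiff value: carries a number and its derivative w.r.t. one input."""
    val: float
    grad: float = 0.0

    @staticmethod
    def lift(x):
        # Treat plain floats as constants (derivative 0).
        return x if isinstance(x, Dual) else Dual(float(x))

    def __add__(self, other):
        other = Dual.lift(other)
        return Dual(self.val + other.val, self.grad + other.grad)

    __radd__ = __add__

    def __sub__(self, other):
        other = Dual.lift(other)
        return Dual(self.val - other.val, self.grad - other.grad)

    def __mul__(self, other):
        other = Dual.lift(other)
        # Product rule: (uv)' = u v' + u' v
        return Dual(self.val * other.val, self.val * other.grad + self.grad * other.val)

    __rmul__ = __mul__


def simulate_height(v0, y0=0.0, g=9.81, dt=0.01, steps=100):
    """Semi-implicit Euler integration of a ball thrown straight up (hypothetical toy model)."""
    y, v = y0, v0
    for _ in range(steps):
        v = v - g * dt  # gravity updates velocity first
        y = y + v * dt  # position uses the updated velocity
    return y


# Seed the input derivative d(v0)/d(v0) = 1 so result.grad is d(height)/d(v0).
result = simulate_height(Dual(5.0, 1.0))
print(f"height = {result.val:.5f} m, d(height)/d(v0) = {result.grad:.5f} s")
```

With 100 steps of 0.01 s, the derivative works out to the total simulated time (1 s), which matches the closed form for this integrator. A gradient like this is what an optimizer would follow to, for example, adjust a launch velocity until the ball reaches a target height.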

This accelerates the creation of physically capable AI systems that learn faster, perform more safely and operate effectively in real-world environments.

However, scaling embodied intelligence isn’t just about compute — it’s about access. Fan reaffirmed NVIDIA’s commitment to open source, emphasizing how the company’s frameworks and foundation models are shared to empower developers and researchers globally.

Developers can get started with NVIDIA’s open embodied and physical AI models on Hugging Face.

NVIDIA’s Llama‑Embed‑Nemotron‑8B model has been recognized as the top open and portable model on the Multilingual Text Embedding Benchmark leaderboard.

Built on the meta‑llama/Llama‑3.1‑8B architecture, Llama‑Embed‑Nemotron‑8B is a research text embedding model that converts text into 4,096‑dimensional vector representations. Designed for flexibility, it supports a wide range of use cases, including retrieval, reranking, semantic similarity and classification across more than 1,000 languages.
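Retrieval and semantic similarity with an embedding model typically reduce to comparing vectors, most often by cosine similarity. The minimal sketch below assumes the query and document embeddings have already been computed (how Llama‑Embed‑Nemotron‑8B is loaded and prompted is not shown here) and uses hypothetical 4-dimensional toy vectors in place of real 4,096-dimensional ones.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_documents(query_vec: list[float], doc_vecs: dict[str, list[float]]) -> list[tuple[str, float]]:
    """Return (doc_id, score) pairs sorted from most to least similar to the query."""
    scores = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Hypothetical toy embeddings: 4 dimensions stand in for the model's 4,096.
query = [0.9, 0.1, 0.0, 0.2]
docs = {
    "doc_gpu": [0.8, 0.2, 0.1, 0.1],
    "doc_robot": [0.1, 0.9, 0.3, 0.0],
    "doc_mixed": [0.5, 0.5, 0.0, 0.1],
}

ranking = rank_documents(query, docs)
for doc_id, score in ranking:
    print(f"{doc_id}: {score:.3f}")
```

At real scale, the vectors would be stored as a matrix and scored with a single matrix multiply (or an approximate nearest-neighbor index), but the ranking logic is the same.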

Trained on a diverse collection of 16 million query–document pairs — half from public sources and half synthetically generated — the model benefits from refined data generation techniques, hard‑negative mining and model‑merging approaches that contribute to its broad generalization capabilities.
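The source names hard-negative mining without detailing NVIDIA's exact procedure. As a hedged illustration of the general idea, the sketch below takes query-to-document similarity scores from some existing retriever (assumed to be precomputed), drops the known positives, and keeps the highest-scoring remaining documents as "hard" negatives for contrastive training. The `max_ratio` filter, which skips candidates scoring suspiciously close to the positive because they may be unlabeled positives, is one common variant and an assumption here; all names and scores are hypothetical.

```python
def mine_hard_negatives(
    scores: dict[str, float],
    positive_ids: set[str],
    k: int = 2,
    max_ratio: float = 0.95,
) -> list[str]:
    """Pick up to k hard negatives for one query.

    scores: doc_id -> similarity to the query, from a hypothetical existing retriever.
    positive_ids: doc_ids labeled relevant to the query.
    Candidates scoring above max_ratio * (best positive score) are skipped,
    since they may be relevant documents that were never labeled.
    """
    best_positive = max(scores[p] for p in positive_ids)
    threshold = max_ratio * best_positive
    candidates = [
        (doc_id, s)
        for doc_id, s in scores.items()
        if doc_id not in positive_ids and s <= threshold
    ]
    # Highest-scoring non-positives are the hardest for the model to tell apart.
    candidates.sort(key=lambda pair: pair[1], reverse=True)
    return [doc_id for doc_id, _ in candidates[:k]]


scores = {"pos": 0.90, "near_dup": 0.88, "hard_a": 0.75, "hard_b": 0.70, "easy": 0.10}
negatives = mine_hard_negatives(scores, positive_ids={"pos"}, k=2)
print(negatives)
```

Here `near_dup` is filtered out as a likely false negative, and the two next-closest documents become the negatives. Easy negatives such as `easy` give the model little signal, which is why mining favors the harder ones.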

This result builds on NVIDIA’s ongoing research in open, high‑performing AI models. Following earlier leaderboard recognition for the Llama NeMo Retriever ColEmbed model, the success of Llama‑Embed‑Nemotron‑8B highlights the value of openness, transparency and collaboration in advancing AI for the developer community.

Check out Llama-Embed-Nemotron-8B on Hugging Face, and learn more about the model, including architectural highlights, training methodology and performance evaluation.

Open models are shaping the future of AI, enabling developers, enterprises and governments to innovate with transparency, customization and trust. In the latest episode of the NVIDIA AI Podcast, NVIDIA’s Bryan Catanzaro and Jonathan Cohen discuss how open models, datasets and research are laying the foundation for shared progress across the AI ecosystem.

The NVIDIA Nemotron family of open models represents a full-stack approach to AI development, connecting model design to the underlying hardware and software that power it. By releasing Nemotron models, data and training methodologies openly, NVIDIA aims to help others refine, adapt and build upon its work, resulting in a faster exchange of ideas and more efficient systems.

“When we as a community come together — contributing ideas, data and models — we all move faster,” said Catanzaro in the episode. “Open technologies make that possible.”

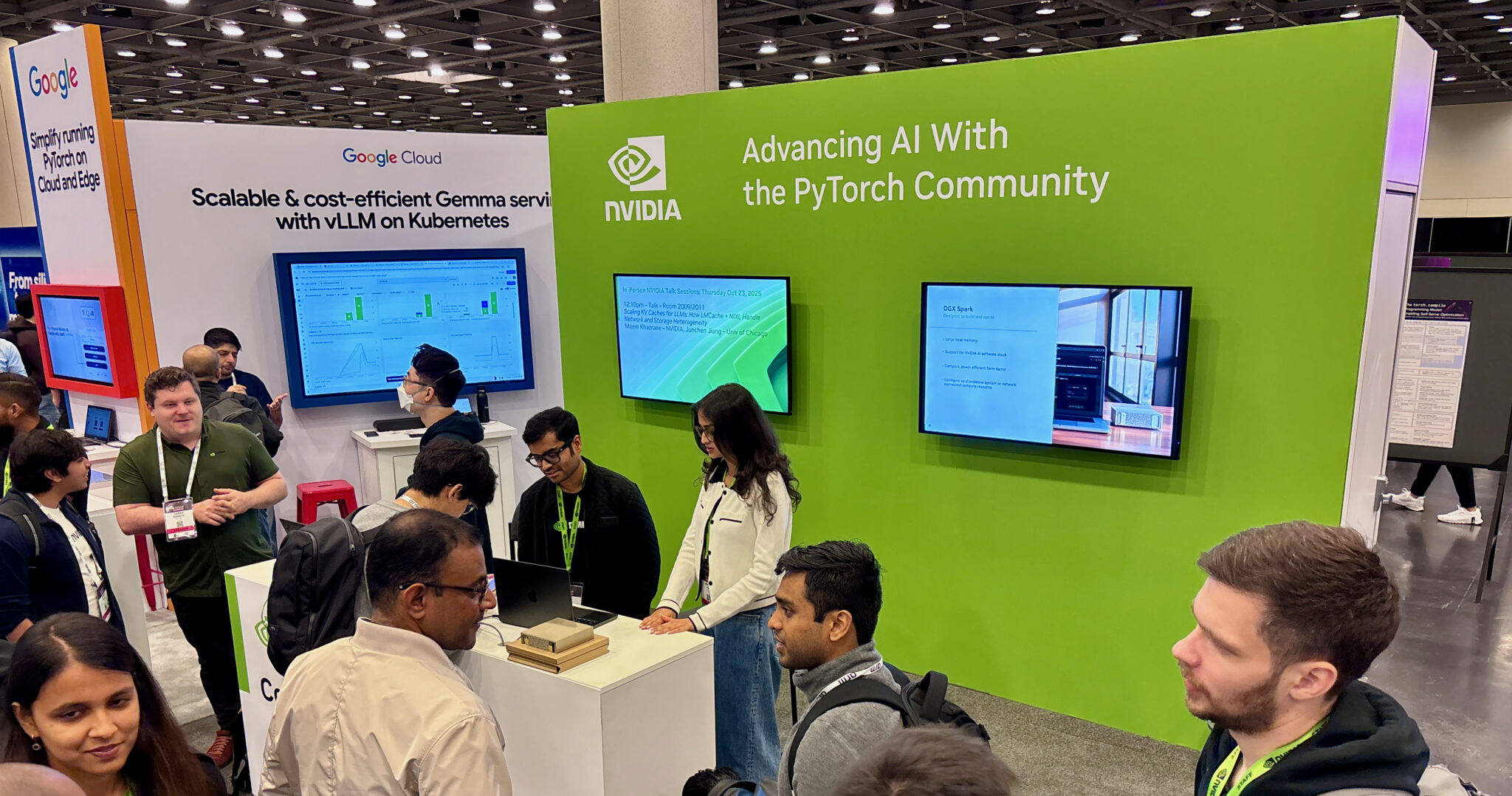

There’s more happening this week at Open Source AI Week, including the start of the PyTorch Conference — bringing together developers, researchers and innovators pushing the boundaries of open AI.

Attendees can tune in to the special keynote address by Jim Fan, director of robotics and distinguished research scientist at NVIDIA, to hear the latest advancements in robotics — from simulation and synthetic data to accelerated computing. The keynote, titled “The Physical Turing Test: Solving General Purpose Robotics,” will take place on Wednesday, Oct. 22, from 9:50-10:05 a.m. PT.

Computer scientist Andrej Karpathy recently introduced Nanochat, calling it “the best ChatGPT that $100 can buy.” Nanochat is an open-source, full-stack large language model (LLM) implementation built for transparency and experimentation. In about 8,000 lines of minimal, dependency-light code, Nanochat runs the entire LLM pipeline — from tokenization and pretraining to fine-tuning, inference and chat — all through a simple web user interface. NVIDIA is supporting Karpathy’s open-source Nanochat project by releasing two NVIDIA Launchables, making it easy to deploy and experiment with Nanochat across various NVIDIA GPUs.
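Nanochat's pipeline begins with tokenization. As a rough illustration of that stage only, here is a toy byte-level byte-pair encoding (BPE) trainer in pure Python: it repeatedly finds the most frequent adjacent token pair and replaces it with a new token ID. This is a hypothetical sketch, not Nanochat's tokenizer code; the function names are illustrative, and a production tokenizer adds text pre-splitting, special tokens and a much faster implementation.

```python
from collections import Counter
from typing import Optional


def most_frequent_pair(tokens: list[int]) -> Optional[tuple[int, int]]:
    """Return the most common adjacent pair, or None if there are fewer than 2 tokens."""
    pair_counts = Counter(zip(tokens, tokens[1:]))
    if not pair_counts:
        return None
    # On ties, max() keeps the first pair encountered (Counter preserves insertion order).
    return max(pair_counts, key=pair_counts.get)


def merge(tokens: list[int], pair: tuple[int, int], new_id: int) -> list[int]:
    """Replace every non-overlapping occurrence of pair (scanning left to right) with new_id."""
    out, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(tokens[i])
            i += 1
    return out


def train_bpe(text: str, num_merges: int) -> tuple[list[int], dict[tuple[int, int], int]]:
    """Start from raw UTF-8 bytes (IDs 0-255) and learn up to num_merges new tokens."""
    tokens = list(text.encode("utf-8"))
    merges: dict[tuple[int, int], int] = {}
    next_id = 256
    for _ in range(num_merges):
        pair = most_frequent_pair(tokens)
        if pair is None:
            break
        merges[pair] = next_id
        tokens = merge(tokens, pair, next_id)
        next_id += 1
    return tokens, merges


tokens, merges = train_bpe("aaabdaaabac", num_merges=3)
print(tokens)
print(merges)
```

Each merge shortens the sequence, so the learned vocabulary trades a larger table of token IDs for fewer tokens per text, which is what makes later pretraining and inference cheaper.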

With NVIDIA Launchables, developers can train and interact with their own conversational model in hours with a single click. The Launchables dynamically support different-sized GPUs, including NVIDIA H100 and L40S GPUs, on various clouds without modification. They also automatically work on any eight-GPU instance on NVIDIA Brev, so developers can get compute access immediately.

The first 10 users to deploy these Launchables will also receive free compute access to NVIDIA H100 or L40S GPUs.

Start training with Nanochat by deploying a Launchable:
