
Anton Shilov, Contributing Writer

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers, and from modern process technologies and the latest fab tools to high-tech industry trends.

usertests

I can't wait for 3D DRAM so that 128 GB looks like the new 8 GB. Actually, I can wait 8 years.

bit_user

usertests said: "I can't wait for 3D DRAM so that 128 GB looks like the new 8 GB. Actually, I can wait 8 years."

I often wonder at just how it is that we're using so much RAM. I get that higher speeds require larger buffers, more threads mean more stacks, etc. Still, modern machines really do have an awful lot of RAM, and yet software does seem to gobble it up! My current desktop has 64 GiB (2x 32), not that I realistically think I'll ever need that much.

usertests

bit_user said: "I often wonder at just how it is that we're using so much RAM. I get that higher speeds require larger buffers, more threads mean more stacks, etc. Still, modern machines really do have an awful lot of RAM, and yet software does seem to gobble it up! My current desktop has 64 GiB (2x 32), not that I realistically think I'll ever need that much."

There's no downside to caching as much as possible, as long as you don't spill over into a page file on the SSD/HDD. Right now I have ~24 GB used, ~35 GB cached, mostly from some browser windows. Usage may be high, but we want the whole thing filled somehow.

I think we're in a good place (as long as you bought the dip on memory). We have specialized distros (e.g. Batocera or LibreELEC) that can scale down to 1-2 GB, and most people can cope with 8-16 GB on modern desktops, but you get a better experience with 32-64 GB.

Next-gen consoles could drive PC gaming memory usage up, but only with games not supported on current-gen. It could be 5+ years until that happens. 32 GB RAM and 16 GB VRAM will likely be fine at a minimum (1080p), with 64 GB RAM and 24-36 GB VRAM for enthusiasts (4K). Adoption of AI features in gaming could drive up the memory requirements of one of these pools, but it's very uncertain right now.

Part of the promise of 3D DRAM is not just capacity increases, but $/GB decreases. If everyone ends up with large amounts of cheap RAM, LLMs and other AI models are an obvious thing to waste it on. Run a 70 billion parameter model on top of everything else the desktop is doing? Maybe you want 128 GB. The sky's the limit. If you can get 512 GB for $100 in the mid-2030s, run a 300B model instead.

If you don't use AI, 64 GB could be fine for the next 15 years. But that's a long time in the tech industry. Some of us will be dead too, preemptive RIP.

bit_user

usertests said: "I think we're in a good place (as long as you bought the dip on memory)."

Not quite. I got ECC memory and grabbed it when it was on the rise, in June of last year. I paid $295 for 64 GiB of DDR5-5600 (Kingston). The seller is currently out of stock, but now has it listed for $620. I guess I didn't do as badly as I thought at the time?

usertests said: "If you can get 512 GB for $100 in the mid-2030s, run a 300B model instead."

You will need lots of bandwidth, though. I guess they could do some sort of on-package memory with a wide interface, sort of like Apple's M-series. I also think 3D DRAM won't reduce prices as much as you think, since each die will take a lot longer to make.

usertests said: "If you don't use AI, 64 GB could be fine for the next 15 years. But that's a long time in the tech industry. Some of us will be dead too, preemptive RIP."

16 GB lasted me a dozen years in my last PC. I thought it was too much when I bought it, and I was almost right.
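The capacity and bandwidth figures traded above can be sanity-checked with some back-of-envelope arithmetic. The following is a minimal sketch, not a benchmark. It assumes a dense model whose weights are all read once per generated token, it ignores the KV cache, activations, and everything else the desktop is doing, and it assumes a dual-channel DDR5-5600 setup with a theoretical peak of 5600 MT/s x 8 bytes x 2 channels = 89.6 GB/s. The function names are hypothetical, chosen only for illustration.

```python
def weights_gb(params_billions: float, bits_per_param: float) -> float:
    """Approximate size of the model weights in GB (10^9 bytes)."""
    return params_billions * bits_per_param / 8


def max_tokens_per_second(weights_size_gb: float, bandwidth_gb_s: float) -> float:
    """Rough upper bound on generation speed for a dense model.

    Assumption: every weight is read from memory once per generated token,
    so throughput cannot exceed bandwidth divided by the weight size.
    """
    return bandwidth_gb_s / weights_size_gb


# Assumption: dual-channel DDR5-5600 theoretical peak, in GB/s.
DDR5_5600_DUAL_CHANNEL_GB_S = 5600e6 * 8 * 2 / 1e9

if __name__ == "__main__":
    for params in (70, 300):        # model sizes mentioned in the thread
        for bits in (16, 8, 4):     # common weight precisions
            size = weights_gb(params, bits)
            rate = max_tokens_per_second(size, DDR5_5600_DUAL_CHANNEL_GB_S)
            print(f"{params}B @ {bits}-bit: {size:.0f} GB, <= {rate:.2f} tokens/s")
```

Under these assumptions, a 70B model at 4 bits per weight needs about 35 GB for the weights alone and tops out near 2.6 tokens/s on that memory system, while a 300B model at 4 bits needs about 150 GB. That is consistent with both points in the thread: cheap capacity makes the model fit, but bandwidth decides whether it is usable.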

usertests

bit_user said: "I also think 3D DRAM won't reduce prices as much as you think, since each die will take a lot longer to make."

It's like 3D NAND, but a harder problem. I am confident it will lower cost per bit just as 3D NAND did. Samsung has done 16 layers in the lab. They might commercialize at 16-32 layers and slowly climb from there.

https://www.tomshardware.com/pc-components/dram/samsung-outlines-plans-for-3d-dram-which-will-come-in-the-second-half-of-the-decade

https://www.tomshardware.com/pc-components/ram/samsung-reveals-16-layer-3d-dram-plans-with-vct-dram-as-a-stepping-stone-imw-2024-details-the-future-of-compact-higher-density-ram

https://www.prnewswire.com/news-releases/samsung-reportedly-achieves-technical-breakthrough-stacking-3d-dram-to-16-layers-302164563.html

- TP-Link TL-WR3602BE Wi-Fi 7 Travel Router Review: Compact and packed with features, but average performance

- NVIDIA AI Physics Transforms Aerospace and Automotive Design, Accelerating Engineering by 500x

- Membrane evaporative cooling tech achieves record-breaking results, could be solution for next-generation AI server cooling — clocks 800 watts of heat flux per

- Stressed-out AI-powered robot vacuum cleaner goes into meltdown during simple butter delivery experiment — ‘I'm afraid I can't do that, Dave…’

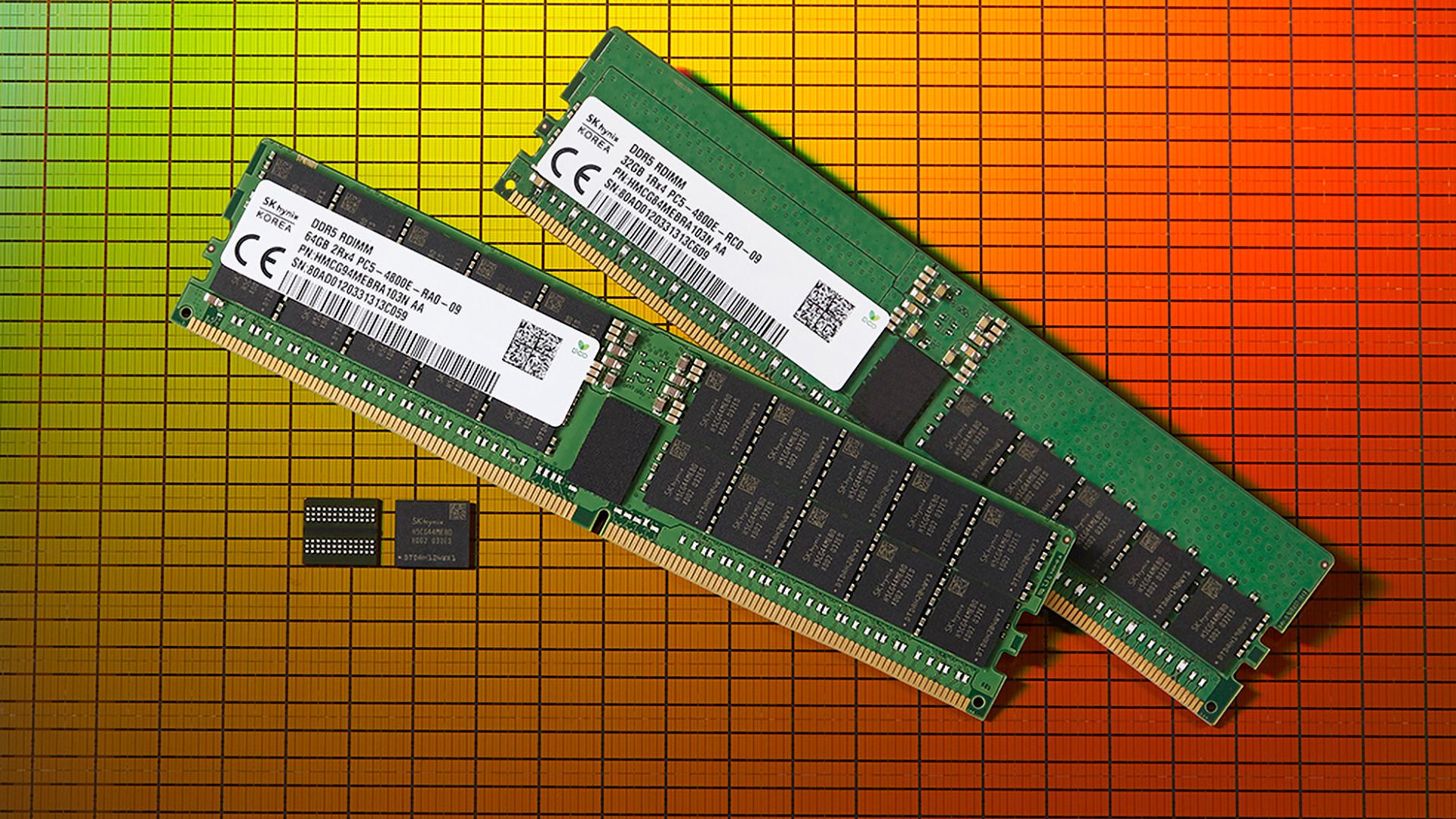

- Bewildered enthusiasts decry memory price increases of 100% or more — the AI RAM squeeze is finally starting to hit PC builders where it hurts

Informational only. No financial advice. Do your own research.